SCENARIO 01 – The Coefficient Datacenter

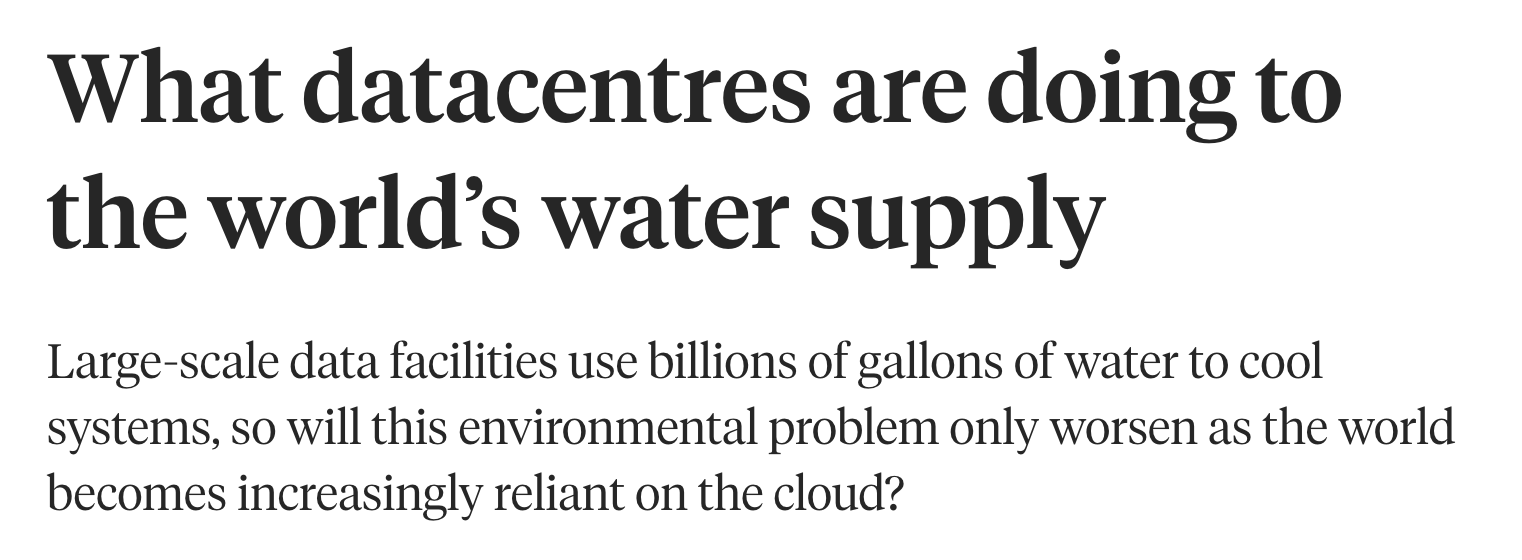

In this scenario, I consider how datacenters power the World Wide Web, including our on-demand streaming services, the digital economy, and social media platforms.

Context

Data centers house upwards of tens of thousands of machines running 24/7 with minimal downtime, and it is estimated that around "17% of the global carbon footprint caused by technology is due to data centers." [1] That power consumption is attributed less to performing computing tasks and more to the energy required to cool the servers, which generate a lot of heat.

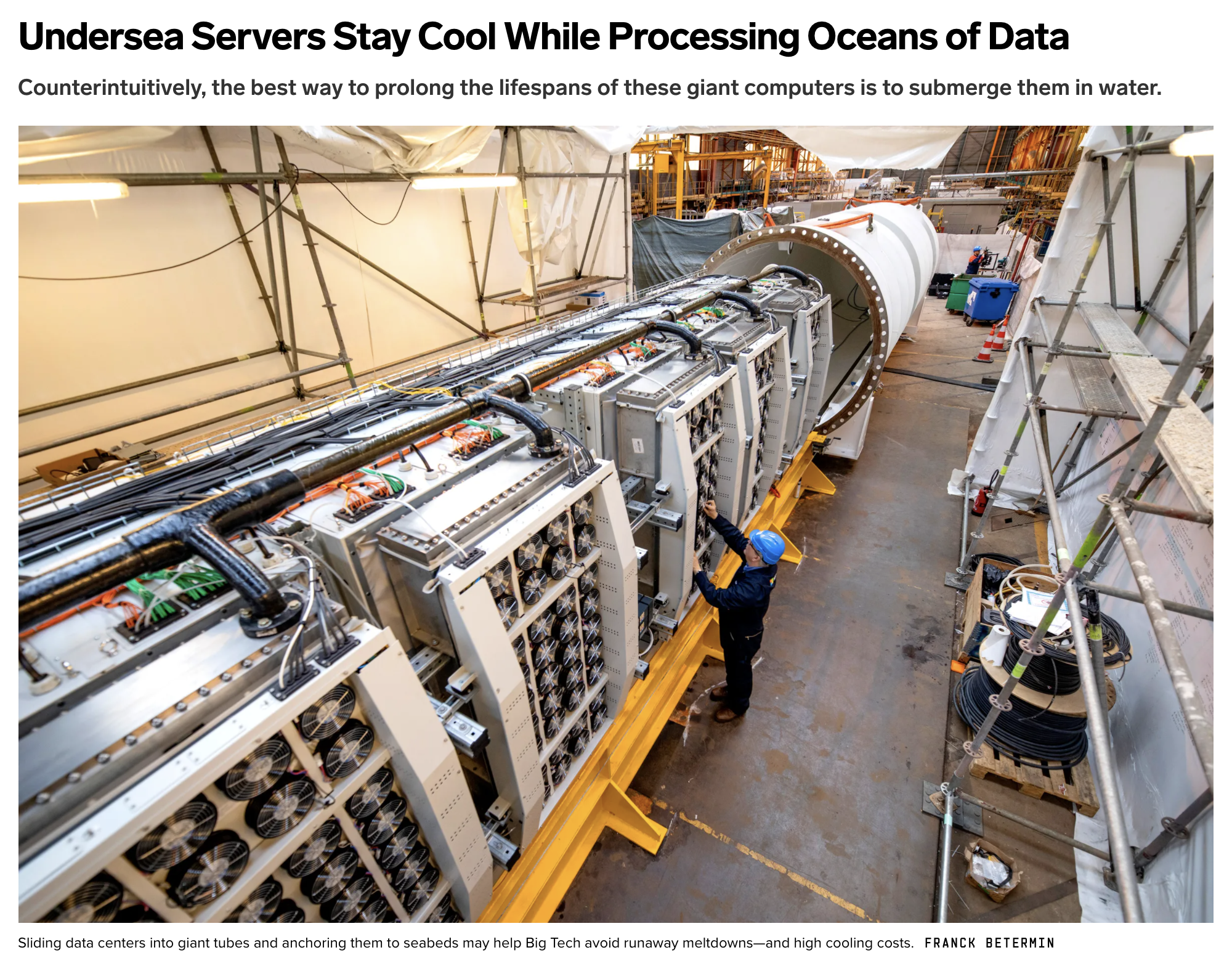

Over the last few years, data center providers and large tech corporations that run their own facilities have looked to natural water resources as a cooling solution: building data centers near rivers, pumping groundwater, and even submerging data centers in the ocean, where they take root on the seafloor.

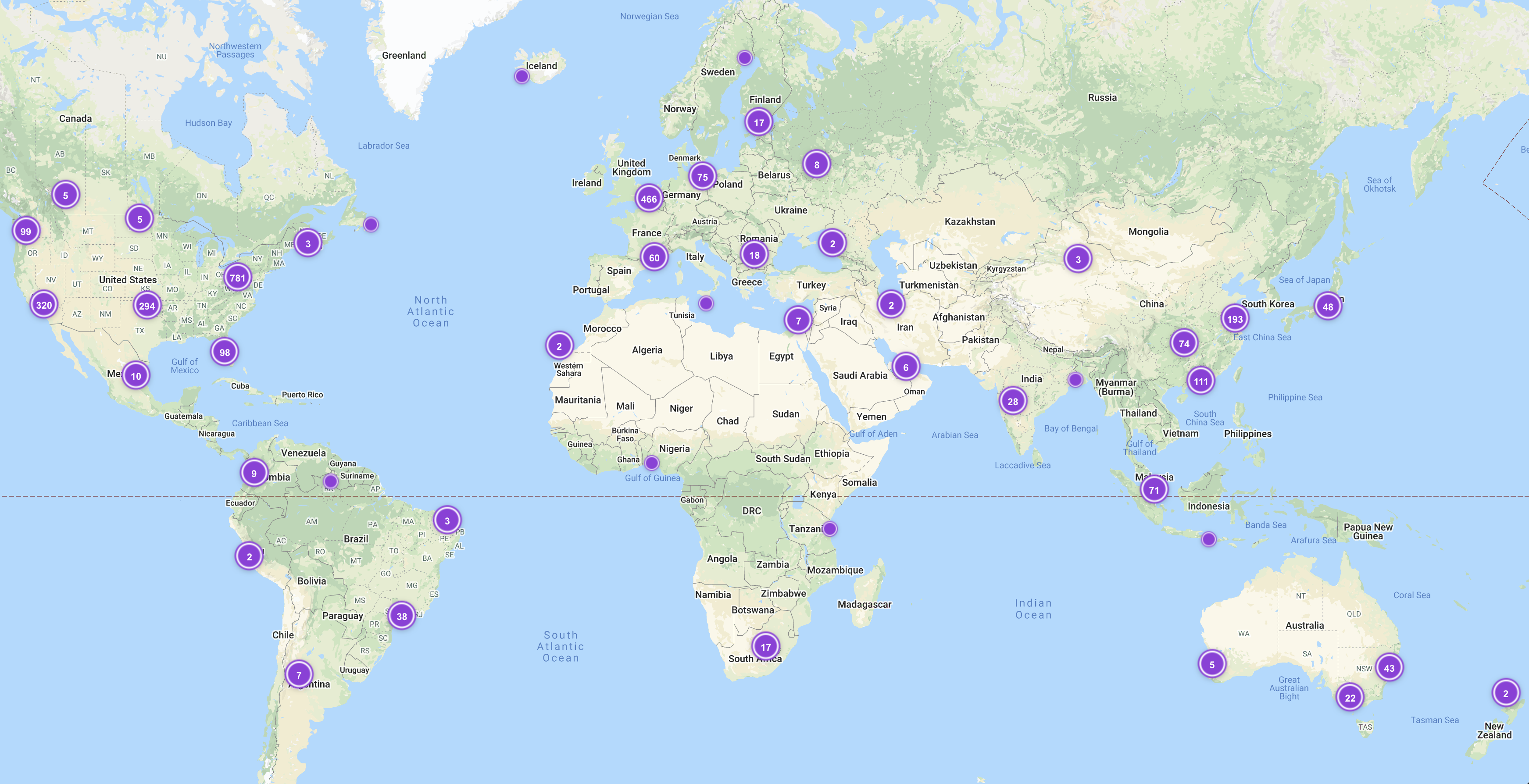

Estimated numbers of datacenters in locations across the world (source: datacenters.com)

Touted as cost-effective and sustainable, the use of natural water resources is actually a greenwashing tactic that diverts attention from the fact that datacenters are heating bodies of water like oceans, and that the long-term impacts on ecosystems are not well understood.

Prototype Experiment

To explore a potential intervention in this scenario, I first tried to recreate this kind of water cooling at a much smaller scale.

With a Raspberry Pi running at high capacity, I built a cooling system that circulates water through an aluminum cooling block sitting on top of the Raspberry Pi’s CPU and cycles the resulting hot water into a 10-gallon aquarium.

Over an extended period of time, I imagine that the water source would heat up enough to make the cooling system much less efficient, and that any ecosystem living in the aquarium would be threatened.

Prototype components + (water) flow diagram.

Prototype - Proof of Concept

Photos of the built prototype.

TESTING: 5 Minute Cycle

Screenshots from the log outputs of the Raspberry Pi CPU temperatures (left) and aquarium temperatures (right) before and after running the system for 5 minutes.

As one can see, the temperature of the Raspberry Pi jumped by roughly 50°F, resulting in a 1.25°F increase in water temperature for the 10-gallon aquarium.
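As a rough illustration of how a before/after comparison like this could be logged, here is a minimal Python sketch. It is not the logging code used for the prototype. It assumes the CPU temperature is read from the standard Linux sysfs thermal file, which reports millidegrees Celsius on Raspberry Pi OS; the helper names are hypothetical, and the aquarium reading is assumed to come from whatever separate sensor is in the water.

```python
from pathlib import Path

# Standard Linux sysfs location for the SoC temperature (millidegrees Celsius).
# Assumption: the prototype may have read its temperatures some other way.
CPU_TEMP_PATH = Path("/sys/class/thermal/thermal_zone0/temp")


def parse_millidegrees(raw: str) -> float:
    """Convert a sysfs reading such as '48312\n' to degrees Celsius."""
    return int(raw.strip()) / 1000.0


def c_to_f(celsius: float) -> float:
    """Convert an absolute temperature from Celsius to Fahrenheit."""
    return celsius * 9.0 / 5.0 + 32.0


def read_cpu_temp_f(path: Path = CPU_TEMP_PATH) -> float:
    """Read the current CPU temperature in Fahrenheit (Raspberry Pi / Linux only)."""
    return c_to_f(parse_millidegrees(path.read_text()))


def temperature_rise(before_f: float, after_f: float) -> float:
    """Difference between two readings taken before and after a test cycle."""
    return after_f - before_f
```

On the Pi itself, calling `read_cpu_temp_f()` at the start and end of the 5-minute cycle and passing both values to `temperature_rise` gives the CPU jump; the same subtraction applies to the two aquarium readings.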

Scaling Back Up - Environmental Sensing

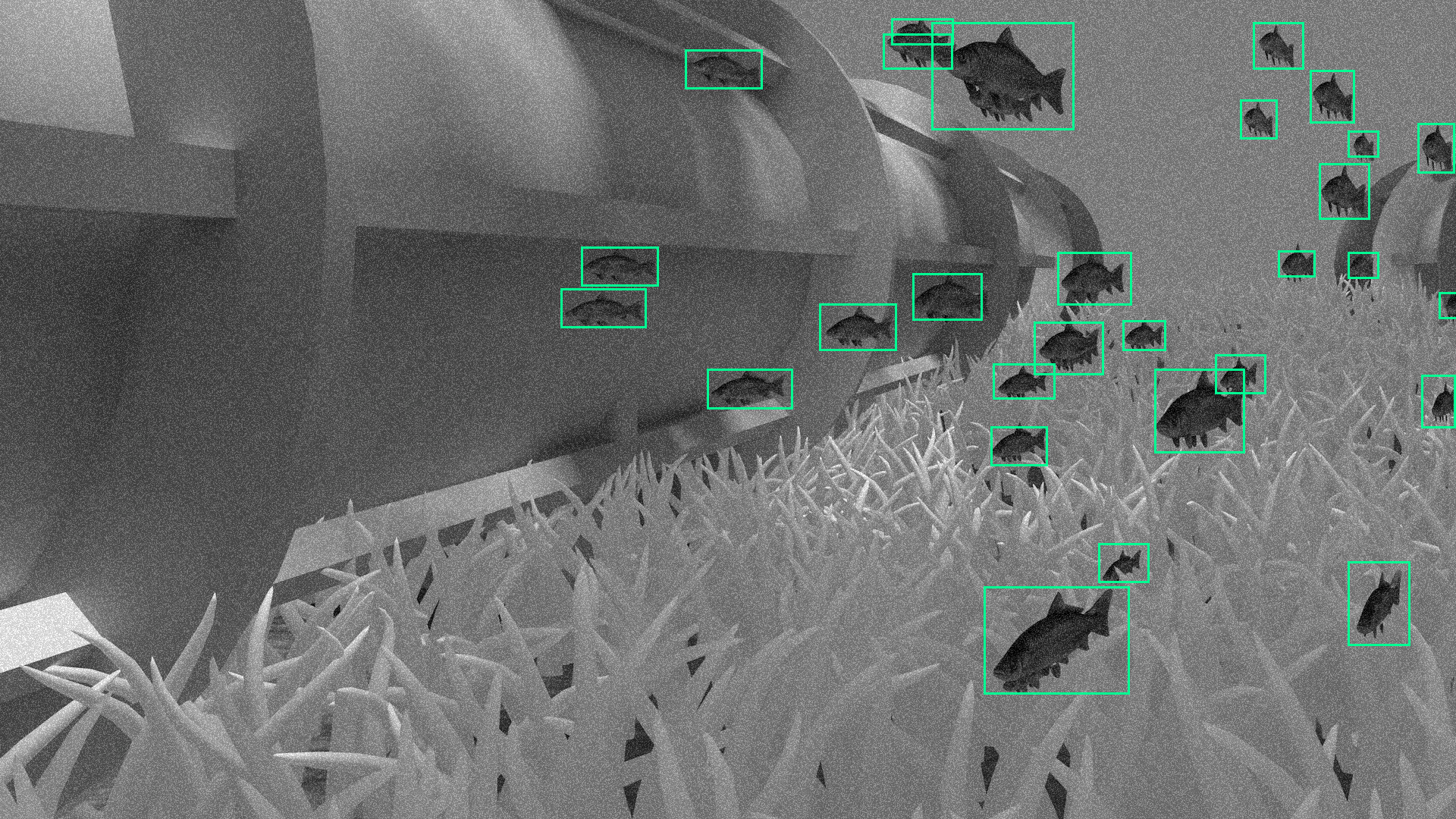

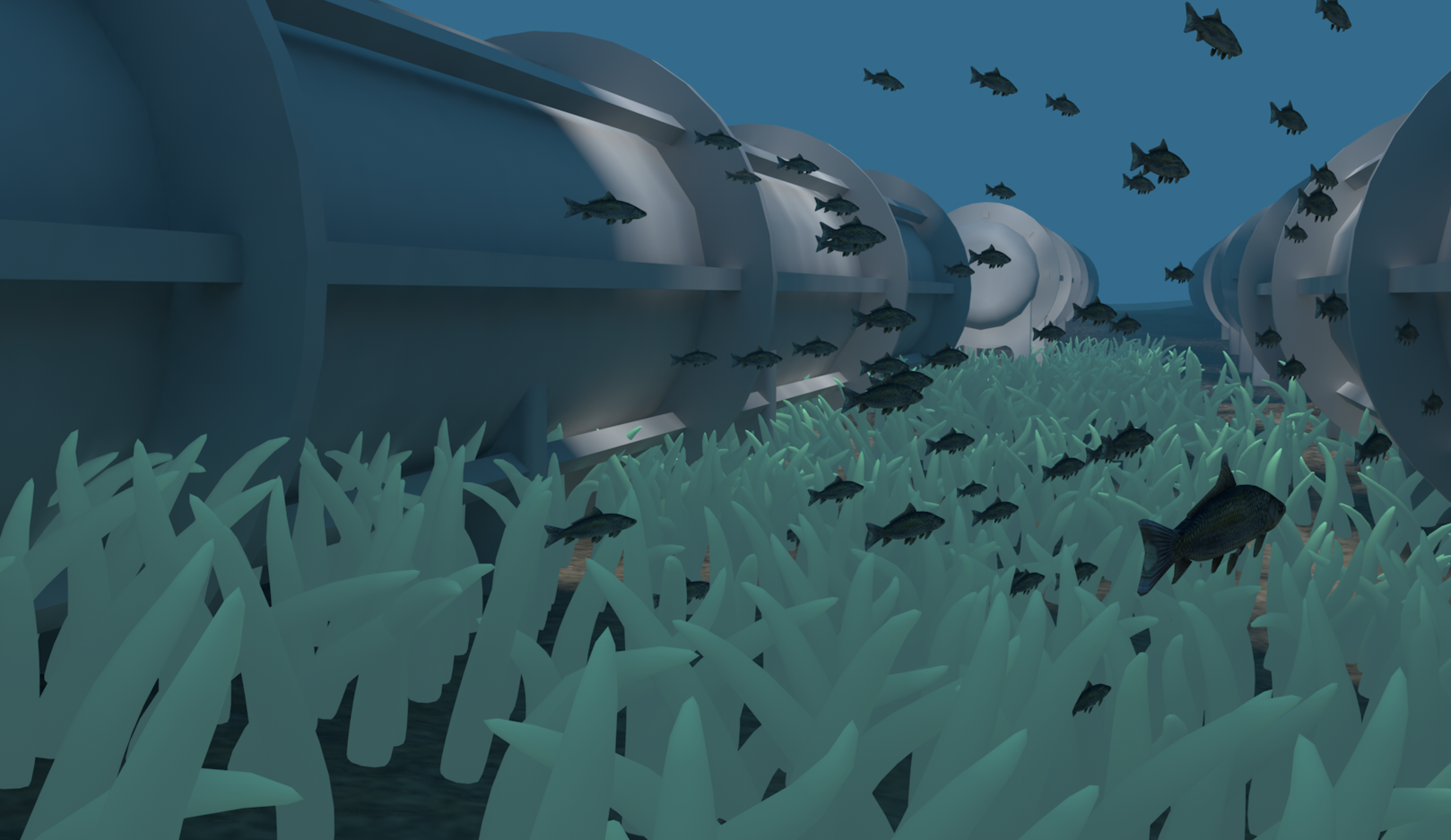

In attempting to scale this back up, I imagined a fictional corporation called Coefficient, whose sole data center is located at the bottom of a lake.

Coefficient’s servers operate according to the health of the ecology that surrounds them, calculating and displaying a score that also indicates the status of their server operations.

While the following examples show close monitoring of physically immediate entities, the broader ecosystem above water is also tracked periodically for a more holistic picture.

Environmental sensing and machine-learning-based computer vision are used, for example, to track the populations of fish that live and swim nearby, gauging health against seasonal averages (left).

Other monitored properties include the level of heat stress on underwater flora, dissolved oxygen levels, ambient water temperatures at the lower and upper depth ranges, and pollution levels of lake sediment (right).
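To make the idea of a single ecological score more concrete, here is a speculative Python sketch of how the monitored properties might be combined. Everything in it is an assumption for illustration: the `LakeReading` fields, the threshold constants, and the equal weighting are placeholders, not real ecological limits or a specification of how Coefficient computes its score.

```python
from dataclasses import dataclass


@dataclass
class LakeReading:
    """One hypothetical snapshot of the monitored properties."""
    fish_count: int               # fish counted by the vision system
    seasonal_fish_avg: float      # expected count for this time of year
    dissolved_oxygen_mg_l: float
    upper_temp_c: float           # water temperature near the surface
    lower_temp_c: float           # water temperature near the lake bed
    flora_heat_stress: float      # 0.0 (none) to 1.0 (severe)
    sediment_pollution: float     # 0.0 (clean) to 1.0 (heavily polluted)


# Placeholder thresholds, chosen only for illustration.
HEALTHY_OXYGEN_MG_L = 8.0
COMFORTABLE_TEMP_C = 18.0
CRITICAL_TEMP_C = 26.0


def clamp01(x: float) -> float:
    """Limit a value to the range 0.0 to 1.0."""
    return max(0.0, min(1.0, x))


def health_score(r: LakeReading) -> float:
    """Average five indicators, each scaled to 0.0 (unhealthy) .. 1.0 (healthy)."""
    fish = clamp01(r.fish_count / r.seasonal_fish_avg) if r.seasonal_fish_avg > 0 else 0.0
    oxygen = clamp01(r.dissolved_oxygen_mg_l / HEALTHY_OXYGEN_MG_L)
    warmest = max(r.upper_temp_c, r.lower_temp_c)
    temperature = clamp01(
        (CRITICAL_TEMP_C - warmest) / (CRITICAL_TEMP_C - COMFORTABLE_TEMP_C)
    )
    flora = 1.0 - clamp01(r.flora_heat_stress)
    sediment = 1.0 - clamp01(r.sediment_pollution)
    indicators = [fish, oxygen, temperature, flora, sediment]
    return sum(indicators) / len(indicators)
```

An equal-weight average is the simplest possible choice; a real system would presumably weight indicators differently and account for the broader above-water ecosystem as well.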

Coefficient Company - Landing Page

I intentionally designed this landing page with corporate aesthetics and language. In doing so, I asked myself whether I’d trust this kind of company – I wouldn’t.

Moving away from an ‘always on’ mentality

In this scenario, I was interested in thinking about the gap between internet consumption and its downstream impact on the environment. Here, healthy environmental conditions mean operations are running normally.

When those conditions aren’t as healthy, then, it makes sense that data centers would reduce their output, no longer running 24/7 with minimal downtime. In the summertime, for instance, the water might already be so warm that it would make the most ecological sense to slow operations as much as possible.
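One way to picture this kind of slowdown is a simple policy that maps an ecological health score to the share of server capacity allowed to run. The Python sketch below is purely illustrative: the score range of 0.0 to 1.0, the two thresholds, and the function names are all assumptions.

```python
# Hypothetical thresholds on a 0.0 .. 1.0 ecological health score.
FULL_CAPACITY_ABOVE = 0.8   # at or above this, operations run normally
PAUSE_BELOW = 0.3           # at or below this, operations pause entirely


def allowed_capacity(score: float) -> float:
    """Return the fraction of capacity (0.0 .. 1.0) allowed for a given score."""
    if score >= FULL_CAPACITY_ABOVE:
        return 1.0
    if score <= PAUSE_BELOW:
        return 0.0
    # Scale linearly between the two thresholds.
    return (score - PAUSE_BELOW) / (FULL_CAPACITY_ABOVE - PAUSE_BELOW)


def active_servers(total_servers: int, score: float) -> int:
    """Number of servers left running under the policy above."""
    return round(total_servers * allowed_capacity(score))
```

Under these placeholder thresholds, a warm summer lake that pulls the score down to 0.55 would leave roughly half of the machines running, and anything at or below 0.3 would pause operations until conditions recover.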

If unhealthy environmental conditions meant shutting or slowing down datacenter outputs, how would humans have to adjust?

There’s been research done on the environmental toll of binging a TV show on Netflix, and on the role that the size of websites plays as well. Going back to one of my earlier questions in the fall, can we imagine shifting away from an always-on mentality?

Then, following the chain a little bit, how would things like our streaming services and APIs respond? Could websites begin to ‘shut down’ or become lower resolution at points of the year, and would people be okay with this, knowing that it’s necessary for sustaining ecosystems?

It’s worth noting that service reductions already exist in different forms: for example, a lower-tier phone plan that throttles your data after you reach a certain cap.

The list of possible server error codes.

It now includes a fictional 512 code: Ecological Health Conditions - Service Unavailable.
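To imagine what a server answering with this fictional code might look like, here is a small Python sketch built on the standard library's `http.server`. The 512 code is not a registered HTTP status; it only sits in the 5xx server-error range. The score threshold, the `Retry-After` interval, and the `EcoHandler` class are hypothetical.

```python
from http.server import BaseHTTPRequestHandler

ECO_UNAVAILABLE = 512  # fictional status code from this scenario
ECO_REASON = "Ecological Health Conditions - Service Unavailable"


def eco_response(score: float, threshold: float = 0.3, retry_after_s: int = 3600):
    """Decide the status, extra headers, and body for a given health score.

    The threshold and retry interval are placeholder values.
    """
    if score < threshold:
        return ECO_UNAVAILABLE, {"Retry-After": str(retry_after_s)}, ECO_REASON
    return 200, {}, "OK"


class EcoHandler(BaseHTTPRequestHandler):
    # Hypothetical: in the scenario this would be updated by the sensing system.
    current_score = 1.0

    def do_GET(self):
        status, headers, body = eco_response(self.current_score)
        payload = body.encode("utf-8")
        # Pass an explicit reason phrase for 512, since the library has none for it.
        self.send_response(status, ECO_REASON if status == ECO_UNAVAILABLE else None)
        for name, value in headers.items():
            self.send_header(name, value)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)
```

To try it locally, the handler can be served with `HTTPServer(("", 8000), EcoHandler).serve_forever()` from the same module; setting `EcoHandler.current_score` below the threshold makes every request return 512 with a `Retry-After` hint.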
